3 Data Problems That Can Be Solved With Data Observability

Collecting more data doesn’t necessarily lead to better analytics and insights.

Gartner predicts that only 20% of analytic insights will deliver business outcomes. If enterprises want to be more successful with their data and analytics initiatives, they need to address deeply entrenched data problems such as data silos, inaccessible data and analytics, and over-reliance on manual interventions.

To achieve successful digital transformation, data teams need to go beyond cleaning incomplete and duplicate data records. A data observability solution like Acceldata can help data teams avoid data silos, make data analytics accessible across the organization and achieve better business outcomes. Data teams can also use Acceldata to leverage AI to automatically detect anomalies.

1. Data Silos Within Your Enterprise

Today, enterprises are overwhelmed with data. Some organizations collect more data in a single week than they used to collect in an entire year, said Rohit Choudhary, CEO of Acceldata, in an interview with Datatechvibe. So, teams are increasingly using more data tools and technologies to meet the data needs of their organization.

As a result, data silos have become the norm. These islands of isolated data create data integrity problems, increase analysis costs, and breed distrust in the data. Data silos also create more work for data teams, forcing them to stitch together fragile data pipelines across different data platforms and technologies.

Use Acceldata Torch to get a single, unified view of your data and data lifecycle

Data observability can help you avoid data silos by offering a centralized view of your entire data pipeline. Such a view shows how your data gets transformed across the entire data life cycle. More specifically, Acceldata Torch offers a unified view of your data pipeline and data-related operations to help you avoid silos.

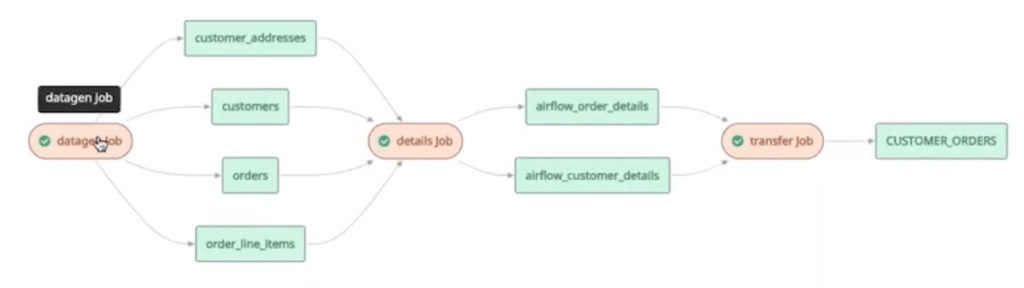

Here is a typical data pipeline in Airflow. It shows how a dataset gets created and written to an RDS location (a remote database) after a JOIN operation. After that, a Databricks job transforms the data, and finally the data is moved into a Snowflake repository for consumption.

And here’s the same pipeline in Acceldata. Red boxes represent various compute jobs, while the green boxes represent the various data elements, locations and tables that interact with the compute jobs.

Such a unified view helps data teams take a step back and understand how data gets transformed across the entire data life cycle, irrespective of the platforms used. It also helps them spot potential pipeline problems, and debug any data transformation mismatches or problems.
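To make the idea of a unified pipeline view concrete, here is a minimal sketch in Python that models the pipeline described above as a small directed graph and lists everything downstream of a given node. It is only an illustration, not Acceldata Torch's API: the node names (`input_a`, `join_job`, `rds_table`, and so on) and the `downstream` helper are hypothetical, and the graph simplifies the real pipeline to one node per step.

```python
from collections import deque

# Hypothetical lineage graph: each key feeds the nodes in its list.
# Names are illustrative only; they do not come from Airflow or Acceldata.
LINEAGE = {
    "input_a": ["join_job"],
    "input_b": ["join_job"],
    "join_job": ["rds_table"],            # JOIN result written to RDS
    "rds_table": ["databricks_job"],      # Databricks job reads from RDS
    "databricks_job": ["snowflake_table"],
    "snowflake_table": [],                # final table used for consumption
}


def downstream(graph, start):
    """Return every node reachable from `start`, in breadth-first order."""
    seen = []
    queue = deque(graph.get(start, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue
        seen.append(node)
        queue.extend(graph.get(node, []))
    return seen


if __name__ == "__main__":
    # Which jobs and tables are affected if the RDS table has a problem?
    print(downstream(LINEAGE, "rds_table"))
```

Asking what sits downstream of a broken table is the kind of question a single view across platforms can answer, because the graph does not care whether a node lives in RDS, Databricks, or Snowflake.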

2. Poor Quality Plus Inaccessible Data and Analytics

A Harvard Business Review survey states that poor data quality (42%), lack of effective processes to generate analysis (40%), and inaccessible data (37%) are the biggest obstacles to generating actionable insights. In a VentureBeat article, Deborah Leff, the CTO for data science and AI at IBM, says, “I’ve had data teams look me in the face and say we could do that project, but we can’t get access to the data.” In other words, enterprises can’t get actionable insights unless data and analytics are accessible at all levels within the organization.

Not having a unified view of the entire data life cycle can result in inconsistencies that affect the quality of data. There is also a paradox: enterprises continue to collect, store, and analyze more data than ever before, yet processing and analyzing that data is becoming more costly and skill-intensive.

As a result, data and analytic capabilities are not readily accessible for consumption and analysis at all levels within an organization. Instead, only a few people with the necessary skills and access are able to use small bits of data. This means that enterprises don’t realize the full potential and value of their data.

Use Acceldata Pulse to lower data handling costs and enable real-time analysis

For most enterprises, high data handling costs and outdated processes prevent them from making data and analytics accessible at all levels within their organization. They can use Acceldata Pulse to:

- Make the data and infra layers more observable by creating alerts that monitor key modules of your infrastructure components such as CPU, memory, database health, and HDFS.

- Accelerate data consumption by helping data teams identify bottlenecks and excess overhead, and optimize queries. It also helps data teams improve data pipeline reliability, optimize HDFS performance, consolidate Kafka clusters, and reduce overall data costs.

- Enable real-time decision-making at all levels within your organization.
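The alerting idea in the first bullet can be sketched as a set of threshold rules evaluated against a metrics sample. This is a generic illustration under stated assumptions, not how Acceldata Pulse is configured: the `AlertRule` class, the `evaluate` function, the metric names, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    """A hypothetical rule: alert when `metric` rises above `threshold`."""
    metric: str
    threshold: float


def evaluate(rules, sample):
    """Return one message for each rule whose metric exceeds its threshold."""
    alerts = []
    for rule in rules:
        value = sample.get(rule.metric)
        # Metrics missing from the sample are skipped rather than alerted on.
        if value is not None and value > rule.threshold:
            alerts.append(f"{rule.metric}={value} exceeds {rule.threshold}")
    return alerts


# Illustrative thresholds; real values depend on the workload.
RULES = [
    AlertRule("cpu_percent", 90.0),
    AlertRule("memory_percent", 85.0),
    AlertRule("hdfs_used_percent", 80.0),
]

if __name__ == "__main__":
    sample = {"cpu_percent": 95.5, "memory_percent": 60.0, "hdfs_used_percent": 82.0}
    for message in evaluate(RULES, sample):
        print(message)
```

Keeping the rules as data rather than code means a team could add a new metric, such as a database health score, without touching the evaluation logic.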

3. Relying Only on Manual Data Interventions

Today, data teams rely on manual interventions to debug problems, detect anomalies and write queries/scripts to prepare raw data for downstream consumption/analytics. But this approach isn’t scalable, nor can it help your data teams deal with the increasing volume of data. So, data teams need to leverage AI and automation.

But implementing AI-based automation is a complex problem. “This is a new period in the world’s history. We build models and machines in AI that are more complicated than we can understand,” says Jason Yosinski, co-founder of Uber AI Labs.

As a result, although two-thirds of companies invest more than $50 million every year in Big Data and AI, only 14.6% have deployed AI capabilities into production.

To make matters worse, enterprises overload data teams with repetitive manual tasks such as cleaning datasets, debugging errors and fixing data outages. This makes it impossible for them to leverage AI and automation.

Leverage AI to automatically clean data, detect anomalies, and prevent outages

Leverage AI capabilities using a data observability solution such as Acceldata Pulse to:

- Automatically clean and validate incoming data streams in real-time so data teams no longer need to write time-consuming manual scripts and can focus on optimizing infra and ensuring reliability.

- Automatically detect anomalies and automate preventive maintenance. It also accelerates root cause analysis and correlates events based on historical comparisons, environment health, and resource contention.

- Automatically analyze the root cause of unexpected behaviour changes by:

  - Getting an overview of all application logs as a time histogram, searchable by severity or service.

  - Comparing different queries and their runtime/configuration parameters.

  - Getting a better understanding of queue utilization for different queries.

  - Getting automatic recommendations to rectify slow queries, predict resource availability and size containers appropriately.
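As a rough illustration of what detecting an anomaly through historical comparison can mean, the sketch below flags a new measurement when it falls far outside the spread of past values. It uses a simple z-score test, chosen here only as an example; the article does not say which technique Acceldata uses, and the function name, the threshold of 3.0, and the sample numbers are all assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history, value, z_threshold=3.0):
    """Return True if `value` is more than `z_threshold` sample standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        # Too little history to estimate spread; do not flag anything.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat history: any change at all is treated as unusual.
        return value != mu
    return abs(value - mu) / sigma > z_threshold


if __name__ == "__main__":
    # Hypothetical query runtimes in seconds from previous runs.
    runtimes = [100, 102, 98, 101, 99]
    print(is_anomalous(runtimes, 150))
    print(is_anomalous(runtimes, 101))
```

A fixed z-score threshold is easy to reason about but assumes roughly stable, non-seasonal behaviour; metrics with daily or weekly cycles would need a baseline that accounts for them.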

Data Observability is Levelling the Playing Field

The top tech companies can afford to hire scores of talented data executives and engineers to wrangle business outcomes out of their data and analytic initiatives. But most companies in the Fortune 2000 group can’t follow this same template. However, they can still get better business outcomes from their data and analytics.

The Acceldata platform helps small data teams to punch above their weight by automating repetitive manual tasks such as cleaning data and detecting anomalies. It also extends a data team’s analytic capabilities by making the data and infrastructure layers more observable.

“More companies need to be successful with their data initiatives — not just a handful of large, internet-focused companies. We’re trying to level this playing field through data observability,” says Choudhary.

Request a free demo to understand how Acceldata can help your enterprise succeed with its data initiatives.

Note: This article was ghost-written for Acceldata as part of my consulting services with Animalz and was published on Acceldata’s blog.